The end of human translation?

Who has not, at some point, had to deal with one of those supposedly automatic translations? They may well be produced automatically, but it is highly optimistic to call them translations. Systems to date have come up against two enormous problems: context and meaning. Those familiar with these programs use them, at best, to grasp the general sense of a text, to pick out key words, or to get a rough draft that clearly needs a great deal of manual, i.e. human, translation. They are the source of countless jokes and a comfort to professional translators: "Now do you see why we will always be in demand?" And, just in case, examples of the mess made by automatic translations get passed around.

But things may be about to change. At its presentation of its sites and projects on 19 May last, Google described, among other novelties, its machine translation project.

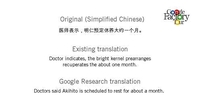

Take a text written in Arabic whose translation with currently available technology has been completely meaningless: the new system turns it, as if by magic, into an acceptable translation. What lies behind this revolution of sorts? Something known as artificial intelligence.

Current automatic translators, such as Systran or PowerTranslator, must first be fed prepared corpora or be equipped with special grammars for each pair of languages they translate between.

The new system instead carries out a wide-ranging statistical analysis of document corpora, some of which consist of translations. The analysis takes in the structural elements of the text, the context, the situations in which certain words appear, how often words occur, the terms used in each document, comparisons with word frequencies in other documents, and earlier analyses.
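To give a feel for how word correspondences can be learned from translated documents alone, with no hand-written grammar, here is a minimal sketch of IBM Model 1, a classic textbook technique from statistical machine translation. It is not Google's system, whose details were not disclosed in the presentation; the function names and the three-sentence Spanish-English corpus are hypothetical illustrations, and the "NULL word" of the full model is left out for brevity.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Learn word-translation probabilities t(tgt | src) from sentence pairs via EM.

    pairs: list of (source_tokens, target_tokens) tuples.
    Returns a dict mapping (target_word, source_word) -> probability.
    Simplification (assumption): the NULL source word of the full model is omitted.
    """
    tgt_vocab = {f for _, tgt in pairs for f in tgt}
    # Start uniform: any target word is equally likely for any source word.
    t = defaultdict(lambda: 1.0 / len(tgt_vocab))

    for _ in range(iterations):
        count = defaultdict(float)  # expected number of (tgt, src) links
        total = defaultdict(float)  # expected number of links from each src word
        for src, tgt in pairs:
            for f in tgt:
                # E-step: share this target word among the source words in its
                # sentence pair, in proportion to the current probabilities.
                norm = sum(t[(f, e)] for e in src)
                for e in src:
                    share = t[(f, e)] / norm
                    count[(f, e)] += share
                    total[e] += share
        # M-step: turn expected counts back into probabilities per source word.
        t = {(f, e): c / total[e] for (f, e), c in count.items()}
    return dict(t)


def best_translation(t, src_word):
    """Return the target word with the highest t(tgt | src_word)."""
    candidates = {f: p for (f, e), p in t.items() if e == src_word}
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical toy corpus: Spanish source sentences, English translations.
    corpus = [
        (["la", "casa"], ["the", "house"]),
        (["la", "flor"], ["the", "flower"]),
        (["una", "casa"], ["a", "house"]),
    ]
    model = train_ibm_model1(corpus)
    for word in ["la", "casa", "flor", "una"]:
        print(word, "->", best_translation(model, word))
```

No sentence pair states which word translates which; the co-occurrence counts alone pull "casa" towards "house" and "la" towards "the". This is the sense in which a larger body of translated text gives the statistics more to work with.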

Users are familiar with the proverbial speed of Google's search and indexing engines. Translation works in a similar, apparently real-time way: the machine draws on lists of meanings and contexts, as well as syntactic, morphological and semantic constructions, taken from a constantly expanding corpus of documents. The more documents are digitised, the better the chances of a correct translation.

Are we, then, on the threshold of a revolution in translation? Will translators go from translating to correcting and proofreading the texts these systems generate? Who will own the copyright in a machine-generated translation built from pre-existing document corpora? Will it come down, in the end, to "well, we will always have literature"? How might a technology like this change the world, given that it could even be applied to voice recognition software and so give rise to cybernetic interpreters? Will Translation and Interpretation students have to retrain, or be retired? And what about the professional associations?